by Dr Antonio Missiroli

Exponential technological progress, especially in the digital domain, is affecting all realms of life. Emerging mainly from the commercial sector, it has led to a democratisation of technologies that could also be weaponised. Technological developments are also generating new dilemmas about their use by the military.

In September 2017, in a speech to students in Moscow, President Vladimir Putin famously argued that whichever country becomes the leader in artificial intelligence (AI) research – a goal that China has explicitly set itself for 2030 – “will become the ruler of the world”.

A few months later, in his presidential address to the Duma, Putin announced that the testing of Russia’s new hypersonic glide vehicle was complete and production was about to begin (this technology is capable of dramatically reducing the time required to reach a target and loadable with both conventional and nuclear warheads).

In September 2019, Houthi rebels from Yemen claimed the first known coordinated massive swarm drone strike, on two oil production facilities in Saudi Arabia, after defeating Saudi air defence systems.

And, in the latest global crisis over COVID-19, there is additional growing evidence of the disruptive, even subversive effects of psychological and (dis)information operations conducted through social media – not to mention widespread espionage activities based on spear-phishing or even direct cyberattacks against medical care facilities.

In short, at both national and multilateral levels, new and potentially disruptive technologies are dramatically challenging the way deterrence, defence, and more broadly security policies are conceived and carried out.

Technology and warfare

From the Stone Age to Hiroshima, technology has deeply influenced (and sometimes contributed to revolutionising) warfare. In turn, warfare has often boosted technologies later applied to civilian life. Purposeful human manipulation of the material world has virtually always been dual-use – from hunting tools to boats, from explosives to combustion engines, from railroads to satellites – as have platforms like chariots, galleys, mechanised vehicles and aircraft. Science-based engineering has always supported warfare, from fortifications to artillery and from communications to surveillance. However, systematic state-funded research and development (R&D) for military purposes started only during the Second World War and arguably peaked during the Cold War.

Technology harnessed by skilled commanders has always acted as a force multiplier in war, allowing them to inflict more harm on the enemy or limit harm on their side. Throughout history, technological superiority has generally favoured victory but never guaranteed it: comparable adversaries have often managed to match and counter tactical advantages, even within the same conflict, whereas manifestly inferior adversaries have often (and sometimes successfully) adopted ‘asymmetric’ tactics in response. In other words, the value of technology in warfare is always relative to the adversary’s capabilities.1

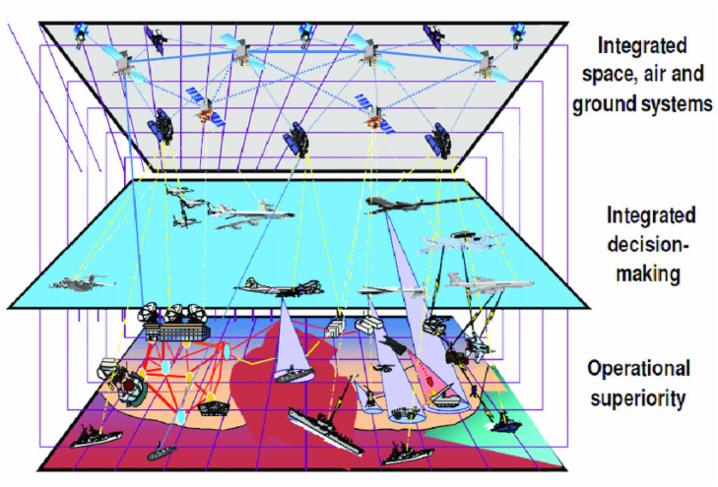

However, what we have been experiencing since at least the 1990s is exponential technological progress that is affecting all realms of life – not only, or primarily, the military. In the deterrence and defence realm, the development and application of information and communications technology (ICT), resulting in precision-guided weapons and so-called ‘net-centric’ warfare, was initially conceptualised as another ‘revolution in military affairs’ (RMA). Previous RMAs include the advent of the chariot in antiquity, gunpowder at the dawn of the modern era, mechanised units after the industrial revolution, and nuclear weapons since the Second World War. Yet, it is now evident that ‘net-centric’ warfare, while developing at a fast pace, is probably more an evolutionary and incremental process of transformation than a revolution in its own right. Nevertheless, it still has largely unpredictable implications for deterrence, defence and security at large.

Just like previous (r)evolutions, the current one is expected to alter dramatically the global balance(s) of power – not only between empires, city-states or nation-states, as in the past, but also within and across actors as, for instance, big tech companies begin to cultivate power and even status often associated with statehood. The 21st century has in fact seen a unique acceleration of technological development – thanks essentially to the commercial sector and especially in the digital domain – creating an increasingly dense network of almost real-time connectivity in all areas of social activity that is unprecedented in scale and pace. As a result, new technologies that are readily available, cleverly employed and combined together offer both state and non-state actors a large spectrum of new tools to inflict damage and disruption above and beyond what was imaginable a few decades ago, not only on traditionally superior military forces on the battlefield, but also on civilian populations and critical infrastructure.

Moreover, most of these technologies – with the possible exception of stealth and hypersonic systems – emanate from an ecosystem fundamentally different from the traditional defence industrial model or ‘complex’, based on top-down long-term capability planning and development, oligopolistic supply (a small number of sellers given to non-price competition) and monopsonistic demand (a single buyer). Accordingly, in the past, military R&D resulted in technology – such as radars, jet engines or nuclear power – that was later adapted and commercialised for civilian use.2 By contrast, these new technologies are being developed from the bottom up and with an extremely short time from development to market: only after hitting millions of consumers worldwide and creating network effects do they become dual-use, and thus ‘weaponisable’.

The vector of dual-use innovation has significantly shifted, with spillover and spin-off effects stemming primarily from the civil realm. Investment in science and technology (S&T) is now mainly driven by commercial markets, both nationally and globally, and the scale of its expenditure dwarfs defence-specific S&T spending, giving rise to technology areas where defence relies completely on civil and market developments. The new superpowers (and ‘super-influencers’) are the private big tech consumer giants from the West Coast of the United States and mainland China.

Remote control and lack of control

The latest technological breakthroughs have fostered in particular the development and democratisation of so-called ‘standoff’ weapons, that is, armed devices which may be launched at a distance sufficient to allow attacking personnel to evade defensive fire from the target area. Delegation and outsourcing of military functions to auxiliaries, mercenaries, privateers, insurgents or contractors – recently labelled as ‘surrogate warfare’ – is nothing new, of course. But these new technologies are challenging the underlying trade-offs between delegation and control, and generating new dilemmas by making it possible to operate unmanned platforms from a distance, first for reconnaissance and surveillance, then also for punishment and decapitation missions. While they do not represent the first application of a machine as a proxy in warfare (cruise missiles served a similar purpose), these new weapons are also providing an incomparable degree of discretion (low visibility, also domestically) and deniability, especially before the international community.3

Most importantly, some are now easily accessible on commercial markets and relatively simple to operate, further breaking the traditional monopoly of states over weaponry and the legitimate use of force and opening up new ‘spaces’ for new types of warfare. They have already been employed in (counter-)terrorism and (counter-)insurgency operations overseas but could easily be deployed in urban environments – and potentially loaded with chemical, biological or radiological agents. In fact, access and intent are crucial in all these cases, lowering the barrier for their use and widening their scope.

For their part, cyberspace-based weapons – when used for sabotage (cyberattacks) and subversion (disinformation and destabilisation campaigns) rather than espionage – go even further in coercing and disrupting while preserving discretion and deniability, as they operate in a purely man-made and poorly regulated environment that relies entirely on technology to work. Digital weapons can indeed achieve strategic effects comparable to warfare without resorting to direct physical violence, whereas most experts consider cyber ‘war’ in a narrow sense to be a far-fetched scenario. As opposed to nuclear weapons, digital weapons are not for deterrence but for actual and even constant use, and they can be operated by states as well as proxies and private organisations without geographic or jurisdictional constraints: attribution is difficult and retribution risky.4

The media space has become an additional battlefield, constituting as it now does a transnational global public sphere where perceptions of right and wrong, victory and defeat (so-called ‘audience effects’)5 are shaped and consolidated at lightning speed. Social media may not have been militarised – although servicemen do use them too, making them vulnerable to hostile campaigns – but have certainly been weaponised. ‘Open source warfare’ is the name of this new game,6 in which individual citizens and consumers often act as more or less unwitting auxiliaries. In fact, while cyber-enabled sabotage requires high levels of know-how but relatively little manpower, cyber-enabled subversion is much simpler to design but requires a critical mass of users to spread narratives. The combination of all these technologies in a comprehensive strategy with tactical variations has been conceptualised as ‘hybrid’ warfare – or, when it remains below the level of armed conflict, just malicious activity.7

Finally, at least so far, space proper has remained relatively immune from these trends, thanks both to the provisions of the 1967 Outer Space Treaty and to the risks intrinsically associated with the possible use of force, for example, debris. Technological developments up there have been focused on facilitating activity down here – mainly satellite communications for broadcasting and navigation – for both public and now increasingly also private actors, with all the resulting democratisation effects. The most capable states have indeed partially militarised space, and now ever more states (also thanks to technological change) are capable of entering the game. Yet while there are no well-tested protocols or rules of engagement for military activity up there, the weaponisation of space-based assets still seems an unlikely scenario.8

Intelligent machines and their scope

Enter artificial intelligence (AI), machine learning and autonomy (quantum computing may still lie a bit farther on the horizon, although it may prove no less disruptive). The concept of AI dates back to the early 1950s but technological progress was very slow until the past decade. Then three main changes occurred: the miniaturisation of processors boosted computing power; the spread of mobile and connected devices favoured the generation of an enormous amount of data; and, finally, the application of new types of algorithms exploiting leaps forward in machine learning (and in particular neural networks) increased the overall capabilities of machines.9

In the domain of public health and diagnostics, such as cancer research, these technological developments are already proving their worth and their benefits are uncontested. In the field of security and defence, however, the jury is still out: the prospect of fully autonomous weapon systems, in particular, has raised a number of ethical, legal and operational concerns.

‘Autonomy’ in weapon systems is a contested concept at international level, subject to different interpretations of its levels of acceptability. The resulting debate triggered, among other things, the establishment of a group of governmental experts on Lethal Autonomous Weapon Systems (LAWS) at the United Nations in 2016. However, this group has not yet come to agreed conclusions. This is in part due to the current strategic landscape and the ‘geopolitics’ of technology, whereby some states developing these systems have no interest in putting regulations in place, while they believe they can still gain a comparative advantage over others. Yet it is also due to the fact that ‘autonomy’ is a relative concept.

Few analysts would contest that, in a compromised tactical environment, some level of autonomy is crucial for an unmanned platform to remain a viable operational tool. Moreover, automatic weapon systems have long existed (for example, landmines) and automated systems are already being used for civilian and force protection purposes, from Israel’s Iron Dome missile defence system to sensor-based artillery on warships. With very few exceptions, current weapon systems are at best semi-autonomous. Moreover, they tend to be extremely expensive and thus hardly expendable.

Technological and operational factors still limit the possible use of LAWS: while engaging targets is getting ever easier, the risks of miscalculation, escalatory effects and lack of accountability – all potentially challenging established international norms and laws of armed conflict – seem to favour meaningful human control. Yet the temptation to exploit a temporary technological advantage through a first strike also remains, and not all relevant actors may play by the same ethical and legal rules.

In addition, beyond the traditional military domain, recent spectacular breakthroughs in voice and face recognition (heavily reliant on AI) may further encourage subversion, while the design of ever more sophisticated adaptive malware may promote more sabotage. Those states that can determine the infrastructure and standards behind such activities will gain a strategic advantage.

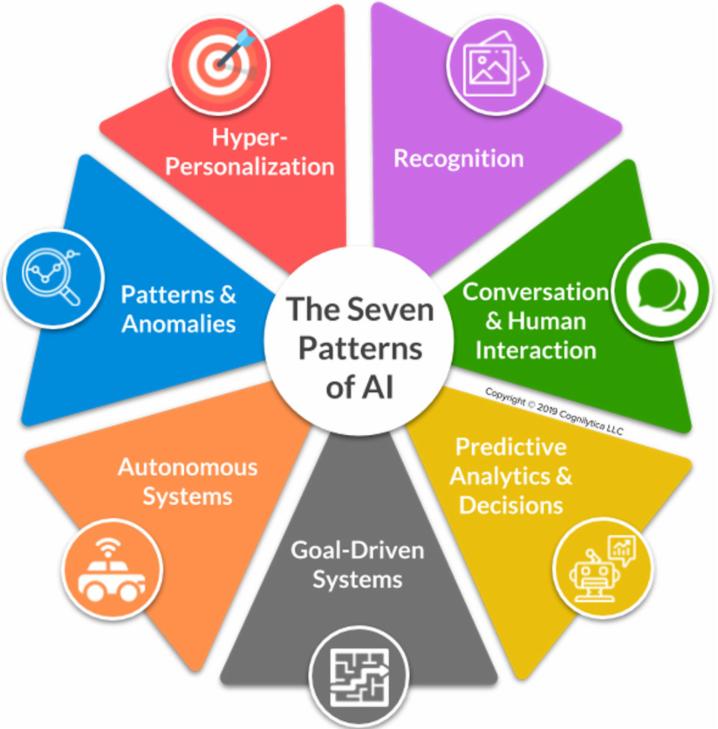

However, mirroring what already happens in medical research, AI can also be used for detection, pattern recognition and simulation purposes – all potentially crucial in the domains of counter-terrorism, civil protection and disaster response as well as arms control (monitoring and verification). Tailored AI applications can indeed provide better intelligence, situational awareness, analysis and, arguably, decision-making. AI can also be used for practical applications already common in the business sector, like more efficient logistics or predictive maintenance for equipment, which all play a very important role in the military.

Furthermore, most experts highlight an important distinction within the AI domain. Accordingly, narrow AI refers to single-purpose systems, that is, machines that may perform unique tasks extremely well in one realm but are almost useless in unfamiliar scenarios or for other applications. By contrast, general AI refers to the capability to conduct a plurality of multidimensional activities without being explicitly programmed and trained to do so – a capability that is believed to be still a long way off.

For the foreseeable future, the range of potential military applications of ‘narrow’ AI appears quite attractive. However, investments will depend on the readiness to take financial risks with limited public budgets and will probably be weighed against other modernisation and operational priorities. Here, too, technology can be both a boon and a bane, and it is not unlikely that the historical pattern whereby labour-replacing technologies encounter more opposition than enabling ones will be replicated.10

In the past, international efforts to control the proliferation, production, development or deployment of certain military technologies (from chemical, biological, radiological and nuclear agents to landmines, from blinding lasers to missile defence systems) were all, to various degrees, driven by four distinct but potentially overlapping rationales: ethics, legality, stability and safety. The possible military use of AI has raised concerns on all four grounds. In the past, again, apparently inevitable arms races in those new fields have been slowed or even halted through some institutionalisation of norms – mostly achieved after those technologies had reached a certain degree of maturity and often advocated, inspired and even drafted by communities of relevant experts (from government and/or academia).

As a general-purpose technology, however, AI is quite peculiar, and so are the expert communities involved in its development and applications.11 Yet it is encouraging to note that a significant number of countries (for instance, in the framework of the Organisation for Economic Co-operation and Development) and companies like IBM, Microsoft and Google have recently come forward to advocate a shared code of conduct for AI – or even publicly articulated their own principles, as has the Pentagon, for example – especially regarding its military and ethical ramifications.

In other words, the risk of an arms race in these emerging technologies undeniably exists, along with a more general concern – expressed by the likes of Henry Kissinger, Stephen Hawking and Elon Musk – about possible unintended consequences of an indiscriminate use of AI. Yet so does the hope that such technologies may still be channelled into less disruptive applications and end up in the same category as poison gas or anti-satellite weapons – in which the most powerful states will abstain from attacking each other, at least militarily, while weaker states or non-state actors may still attack but to little effect.
